Audio-visual 3D Tracker (AV3T)

Compact multi-sensor platforms are portable and thus desirable for robotics and personal-assistance tasks. However, compared to physically distributed sensors, the size of these platforms makes person tracking more difficult.

To address this challenge, we propose an Audio Visual 3D Tracker (AV3T) [1] for multi-speaker tracking in 3D, using the complementarity of audio and video signals captured from a small-size co-located sensing platform. The key points of the proposed algorithm are

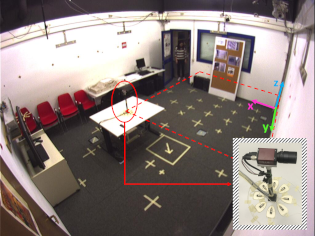

The following figure shows the recording environment of the CAV3D dataset, where the yellow markers on the ground are used to calibrate the corner cameras and the close-view of the co-located sensor (surrounded by the red ellipse) is at the bottom right of the picture. The camera is around 48 cm above the microphone array. The region covered by the camera’s Field of View is within the red dashed line. The world coordinates x, y, z are originated at the top-right room corner, which are marked as magenta, green and blue respectively.

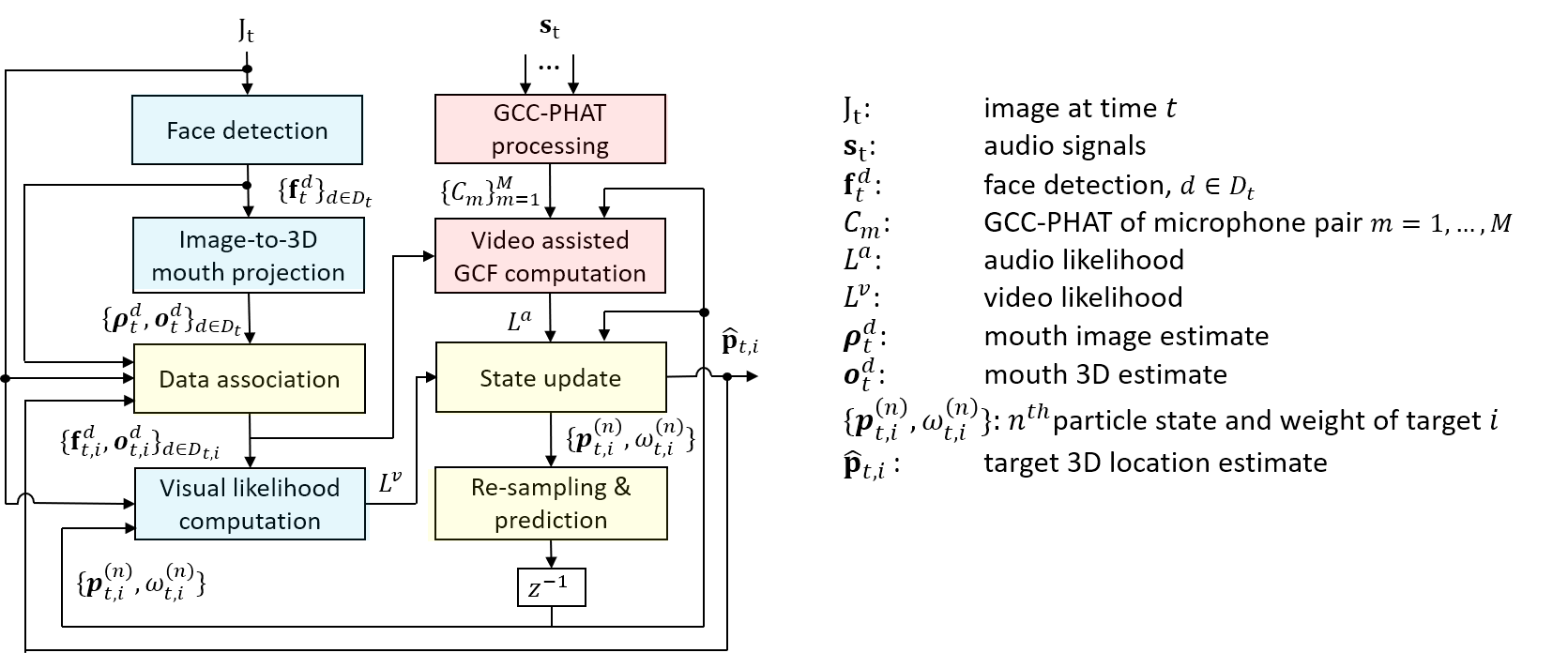

The block diagram of the proposed audio-visual 3D tracker (AV3T) is presented in the following figure, where the light blue blocks represent the computation of the visual likelihood, the light magenta blocks represent the computation of the audio likelihood, the light yellow blocks represent the audio-visual tracking and GCC-PHAT stands for Generalized Cross Correlation with PHAse Transform [3].

We evaluate our proposed AV3T tracker on

MATLAB source code [link]

[1] X. Qian, A. Brutti, O. Lanz, M. Omologo and A. Cavallaro, "Multi-speaker tracking from an audio-visual sensing device," in IEEE Transactions on Multimedia. doi: 10.1109/TMM.2019.2902489

[2] M. Omologo, P. Svaizer, and R. De Mori, “Acoustic transduction,” in Spoken Dialogue with Computer. Academic Press, 1998, ch. 2, pp. 1–46.

[3] C. Knapp and G. Carter, “The generalized correlation method for estimation of time delay,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 24, no. 4, pp. 320–327, Aug 1976.

[4] G. Lathoud, J.-M. Odobez, and D. Gatica-Perez, “AV16.3: an audio-visual corpus for speaker localization and tracking,” in Machine Learning for Multimodal Interaction. Martigny, Switzerland: Springer, Jun 2004.